Wow, indeed 🙂

Dr. Stuart Hameroff has discovered the mechanism envisioned by Roger Penrose. What I’m saying is astonishing: there is a structure in the brain that operates at the finest-grained level of quantum gravity! Think about that for a minute. In all the experiments I have ever seen involving quantum processes, one major factor has been the extremely cold temperatures and highly insulated environments necessary to create and maintain observable quantum effects, as with the D-Wave computer, for example. Living tissue is supposedly far too warm and ‘noisy’ to maintain a coherent quantum superposition. Not so, according to Drs. Hameroff and Penrose.

Stuart Hameroff is an anesthesiologist, a Professor at the University of Arizona, and Associate Director of the Center for Consciousness Studies, so consciousness is basically his middle name. In the course of his studies, he discovered that anesthesia seems to operate by disrupting a very specific type of electrical activity in the brain, the higher-frequency gamma synchronies. These electrical potentials arise in a very different manner from the neuronal axonal spikes associated with dendritic chemical synapses.

Gamma synchrony, first of all, is a synchrony; that is, the neurons are not firing consecutively, as with the chemically induced synaptic spikes, but rather simultaneously, across several hundred thousand neurons at a time. How do they do this? It appears that there is another kind of synaptic junction in brain dendrites, in addition to the familiar chemical type involving neurotransmitters and electrolytes. Gamma synchronies travel as quantum superpositions, tunneling instantaneously rather than firing consecutively, through gap junctions, that is, directly conjoined portals in the dendritic membranes.

How these superpositions arise will be discussed, but first let us understand that this is not the typical kind of dendritic activity we all learned in grade school. Rather than an exchange of neurotransmitters at synaptic junctions across a space between the neurons, one by one, the gamma potential arises simultaneously in a large group of neurons and is shared directly from neuron to neuron through the gap junctions with no space in between:

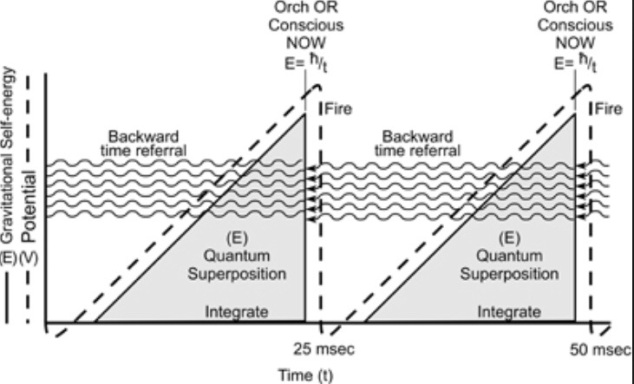

The gamma potentials arise from lattice structures inside the brain dendrites, the microtubules, seen as the horizontal structures inside the circle in the diagram above. The diagram also shows the microtubules emitting the high-frequency gamma waves and how the waves pass through the gap junction. These waves constitute the synchronies, which occur at a frequency of 40-80+ per second. Each superposition potential, each individual wave, is able to quantum tunnel because of the time symmetry permitted as the wave rises into the density matrix (nonlocality), thereby eliminating the need for consecutive spikes. This phenomenon was demonstrated in ‘Libet’s Case of Backwards Referral in Time’ (Dennett, Consciousness Explained, 153-166) but denied because of the lack of an appropriate physical mechanism for backwards time referral of perception. Here we have it!

Now here’s where it gets weird.

Each microtubule is made of individual protein dimers called tubulin. The tubulin dimers arrange themselves in precise geometric patterns to form Fibonacci spiral lattice tubes. Tubulin is a kind of protein that contains aromatic rings (in amino acids such as tryptophan and phenylalanine), so that when the protein folds, these rings create hydrophobic pockets in the interior of the folds. These are electrically insulated areas: water cannot enter, and therefore the noisy electrical interference associated with water cannot disturb the more subtle electrical processes inside the hydrophobic pockets of the dimer. As I said, the dimers arrange themselves in a precise geometrical pattern so that their connections are regular and their mass energies consistent. This is important for the formation and maintenance of sustained coherent gamma potentials along the individual microtubules and among the microtubules en masse. The diagram below is a beautiful illustration of the geometrical order of a portion of a microtubule:

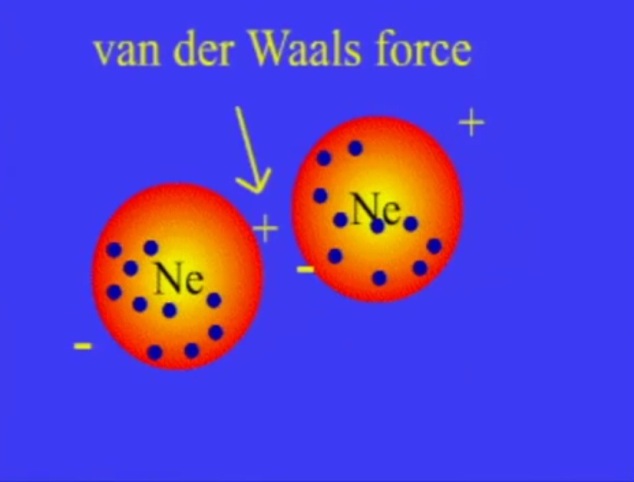

Inside the hydrophobic pockets there form electric dipoles, induced by adjacent dimers. The electron clouds inside one dimer repel the electrons in the neighboring dimer to produce an induced electric dipole. This is called a London force, a type of van der Waals force. It is a quantum physical effect, not a chemical one. The result is a kind of electrical ‘switch’ in which the poles oscillate back and forth. The above diagram looks like a set of polarized tubulin dimers… that is, if you imagine you could actually see the London dipoles. These quantum forces may also exist in a state of superposition, that is, a state of being in both polarities at the same time. The diagrams below illustrate the London van der Waals force and how it creates the electrical switching potential among tubulin dimers.

The gamma potential, then, arises via the superposition state of London force dipoles, tunneling instantaneously through hundreds of thousands of neurons simultaneously. O.M.G. Hameroff calls this kind of network a hyperneuron or dendritic web.

The big problem with this theory is how the superposition resolves on its own, without an objective measurement or measurer. Penrose calls this phenomenon ‘objective reduction’ and has coined the term ‘orchestrated objective reduction’ for the extremely organized and precise process occurring in the brain among the gorgeously, amazingly, mind-blowingly beautifully arranged material substrate of the dendritic microtubules. As discussed in Part 1, the objective reduction of the tunneling gamma superposition wave occurs on the basis of the total mass energy involved in the superposition separation (i.e. tubulin mass), and the amount of time the superposition can be maintained, given a Planck length superposition separation distance: E = ℏ/t, where E is that mass energy, ℏ is the reduced Planck constant, and t is the time the superposition lasts before it reduces.

By that relation, a larger E means a shorter t, so high-amplitude gamma potentials resolve more quickly, resulting in a greater number of arguably more intense conscious moments per second (closer to 80, say), possibly with a resultant perception of time passing more slowly, exactly like increased frames per second in slow motion film photography.
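To get a feel for the numbers in E = ℏ/t, here is a minimal Python sketch. It rests on an assumption of mine that is only implied above: each conscious moment corresponds to one reduction, so t is simply one divided by the number of moments per second. The names `HBAR`, `reduction_time` and `energy_for_rate` are made up for this illustration and do not come from Hameroff or Penrose.

```python
# Reduced Planck constant in joule-seconds (CODATA 2018 value).
HBAR = 1.054571817e-34


def reduction_time(energy_joules: float) -> float:
    """Return t = hbar / E, the time a superposition lasts before reducing."""
    if energy_joules <= 0:
        raise ValueError("energy must be positive")
    return HBAR / energy_joules


def energy_for_rate(moments_per_second: float) -> float:
    """Return E = hbar / t, assuming (illustration only) t = 1 / rate."""
    if moments_per_second <= 0:
        raise ValueError("rate must be positive")
    return HBAR * moments_per_second


if __name__ == "__main__":
    # The two ends of the 40-80 per second range quoted in the post.
    for rate in (40, 80):
        energy = energy_for_rate(rate)
        t_ms = reduction_time(energy) * 1000.0
        print(f"{rate} per second: t = {t_ms:.1f} ms, E = {energy:.3e} J")
```

Doubling the rate from 40 to 80 per second halves t and doubles E, which is the "more mass energy resolves sooner" relationship in arithmetic form. The energies involved come out on the order of 10^-33 joules.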

This process of orchestrated objective reduction of gamma synchronies arising as quantum superpositions in dendritic microtubules is the production of conscious frames of reality, at a rate of 40-80+ per second:

Consciousness is a process in fundamental spacetime geometry, coupled to brain function.

Penrose suggests that Platonic information embedded in Planck scale geometry pervades the universe and is accessible to our conscious process.

~ Stuart Hameroff

Some thoughts on Part 2: on the basis of the above theory, it seems that our conscious process might work in concert with Planck scale geometry to create meaning. I would associate the gamma synchrony input with the ‘immediacy’ concept of Sartre and the further processing of this information with the ‘reflective’ process defined by Sartre. The conscious pilot described by Hameroff seems to be related to the ‘narrative center of gravity’ envisioned by Daniel Dennett.

Finally, what is the potential for quantum nanotechnology, the D-Wave chip, to run an algorithm for consciousness? Stay tuned for Part 3.